Real Time Spark Project for Beginners: Hadoop, Spark, Docker

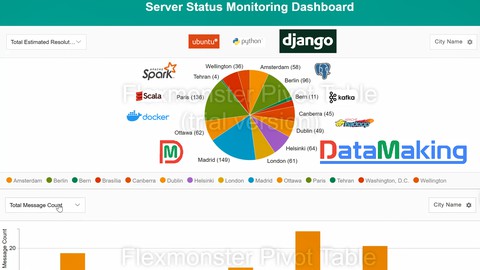

Building a Real-Time Data Pipeline Using Apache Kafka, Apache Spark, Hadoop, PostgreSQL, Django and Flexmonster on Docker

What you’ll learn

- Complete development of a real-time streaming data pipeline using a Hadoop and Spark cluster on Docker

- Setting up a single-node Hadoop and Spark cluster on Docker

- Features of Spark Structured Streaming using Spark with Scala

- Features of Spark Structured Streaming using Spark with Python (PySpark)

- How to use PostgreSQL with Spark Structured Streaming

- Basic understanding of Apache Kafka

- How to build data visualisations using the Django web framework and Flexmonster

- Fundamentals of Docker and containerization

Requirements

- Basic understanding of a programming language

- Basic understanding of Apache Hadoop

- Basic understanding of Apache Spark

Who this course is for:

- Beginners who want to learn the Apache Spark/Big Data project development process and architecture

- Beginners who want to learn the real-time streaming data pipeline development process and architecture

- Entry/intermediate-level Data Engineers and Data Scientists

- Data Engineering and Data Science aspirants

- Data enthusiasts who want to learn how to develop and run Spark applications on Docker

- Anyone who is eager to become a Big Data/Spark Developer
